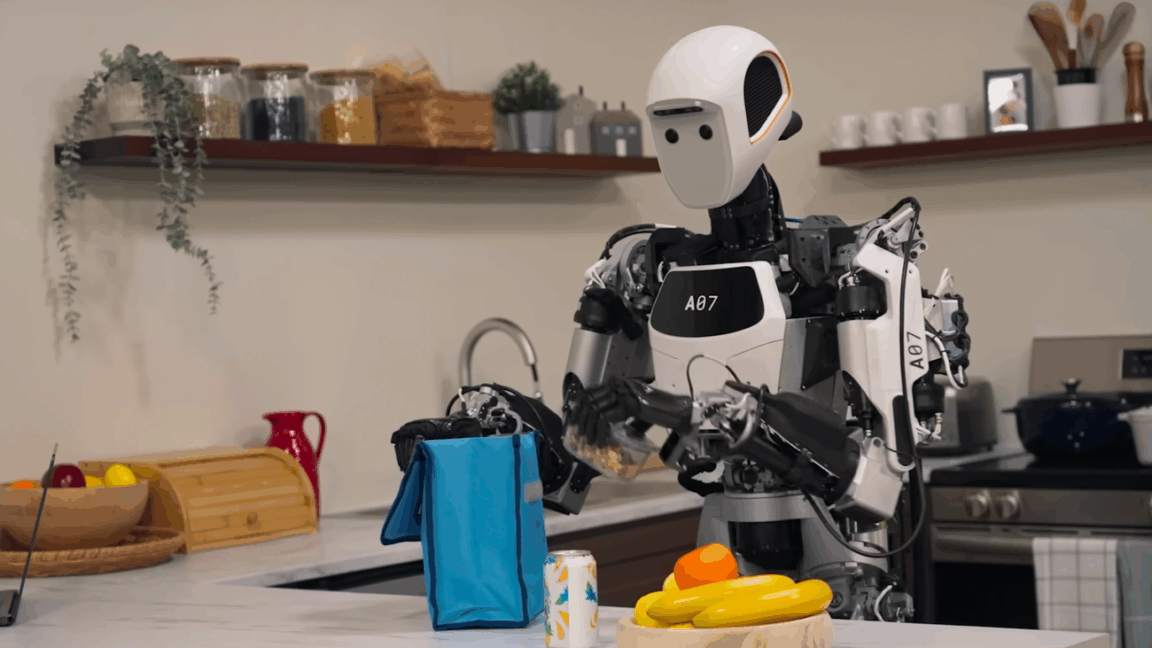

Google releases a new Gemini Robotics On-Device model with an SDK and says the vision-language-action model can adapt to new tasks in 50 to 100 demonstrations (Ryan Whitwam/Ars Technica)

Ryan Whitwam / Ars Technica: Google releases a new Gemini Robotics On-Device model with an SDK and says the vision-language-action model can adapt to new tasks in 50 to 100 demonstrations. We sometimes call chatbots like Gemini and ChatGPT “robots,” but generative AI is also playing a growing role in real, physical robots.
